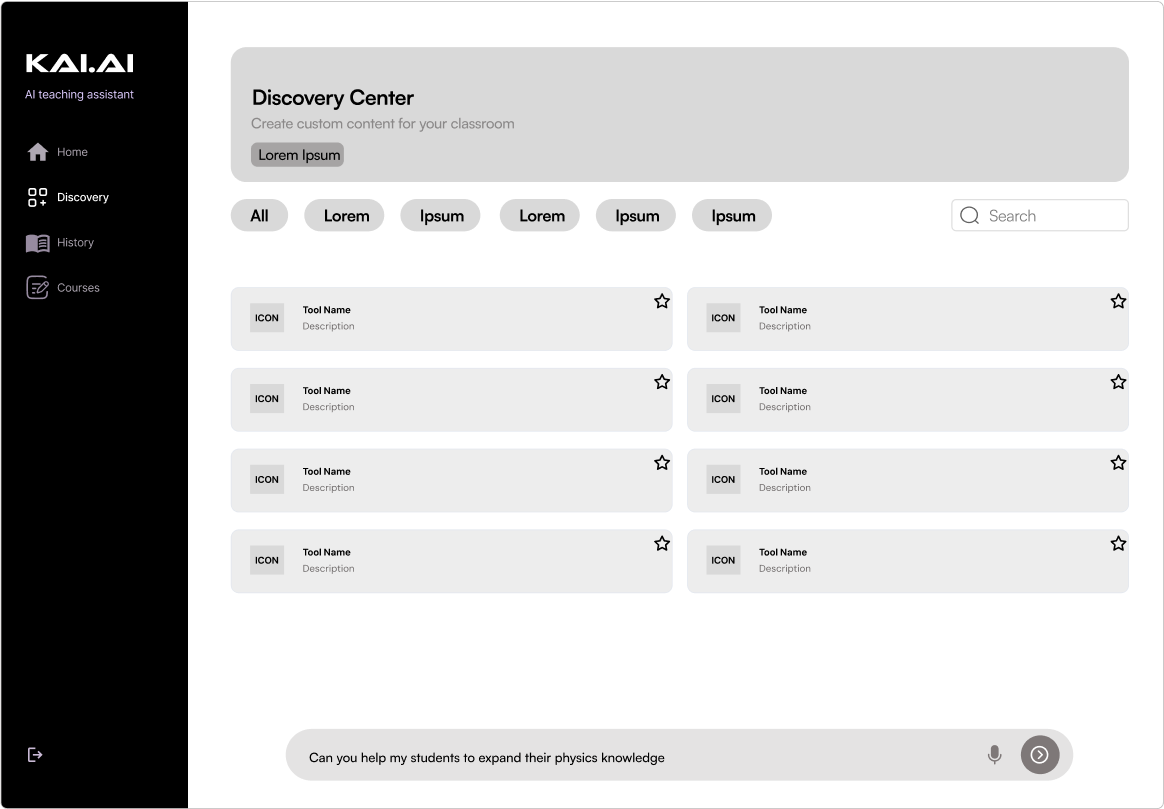

To help inform our next steps, I analyzed how other platforms approach feature discovery.

Building on early user insights, I analyzed the discovery flows of platforms such as Khanmigo, Netflix, and Instagram to understand how they introduce features, guide users, and sustain engagement over time. This helped me identify effective approaches that could be adapted for the KAI interface.